by Whitney Webb at Unlimited Hangout

In mid-February, Daniel Baker, a US veteran described by the media as “anti-Trump, anti-government, anti-white supremacists, and anti-police,” was charged by a Florida grand jury with two counts of “transmitting a communication in interstate commerce containing a threat to kidnap or injure.”

The communication in question had been posted by Baker on Facebook, where he had created an event page to organize an armed counter-rally to one planned by Donald Trump supporters at the Florida capital of Tallahassee on January 6. “If you are afraid to die fighting the enemy, then stay in bed and live. Call all of your friends and Rise Up!,” Baker had written on his Facebook event page.

Baker’s case is notable as it is one of the first “precrime” arrests based entirely on social media posts: the logical conclusion of the Trump administration’s, and now Biden administration’s, push to normalize arresting individuals for online posts to prevent violent acts before they can happen. From the increasing sophistication of US intelligence/military contractor Palantir’s predictive policing programs to the formal announcement of the Justice Department’s Disruption and Early Engagement Program in 2019 to Biden’s first budget, which contains $111 million for pursuing and managing “increasing domestic terrorism caseloads,” the steady advance toward a precrime-centered “war on domestic terror” has been evident under every post-9/11 presidential administration.

Many of the posts targeted by this new so-called war on domestic terror have been made on Facebook. And, while Facebook has long sought to portray itself as a “town square” that allows people from across the world to connect, a deeper look into its apparently military origins and continual military connections reveals that the world’s largest social network was always intended to act as a surveillance tool to identify and target domestic dissent.

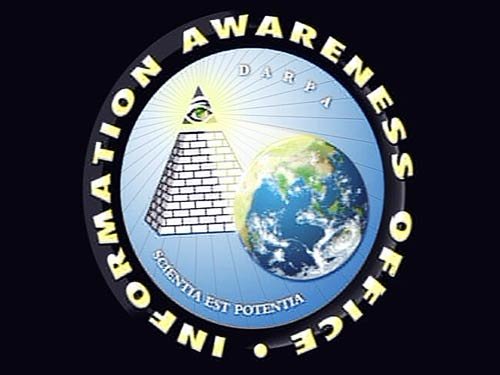

Part 1 of this two-part series on Facebook and the US national-security state explores the social media network’s origins and the timing and nature of its rise as it relates to a controversial military program that was shut down the same day that Facebook launched. The program, known as LifeLog, was one of several controversial post-9/11 surveillance programs pursued by the Pentagon’s Defense Advanced Research Projects Agency (DARPA) that threatened to destroy privacy and civil liberties in the United States while also seeking to harvest data for producing “humanized” artificial intelligence (AI).

As this report will show, Facebook is not the only Silicon Valley giant whose origins coincide closely with this same series of DARPA initiatives and whose current activities are providing both the engine and the fuel for a hi-tech war on domestic dissent.

DARPA’s Data Mining for “National Security” and to “Humanize” AI

In the aftermath of the September 11 attacks, DARPA, in close collaboration with the US intelligence community (specifically the CIA), began developing a “precrime” approach to combatting terrorism known as Total Information Awareness or TIA. The purpose of TIA was to develop an “all-seeing” military-surveillance apparatus. The official logic behind TIA was that invasive surveillance of the entire US population was necessary to prevent terrorist attacks, bioterrorism events, and even naturally occurring disease outbreaks.

The architect of TIA, and the man who led it during its relatively brief existence, was John Poindexter, best known for being Ronald Reagan’s National Security Advisor during the Iran-Contra affair and for being convicted of five felonies in relation to that scandal. A less well-known activity of Iran-Contra figures like Poindexter and Oliver North was their development of the Main Core database to be used in “continuity of government” protocols. Main Core was used to compile a list of US dissidents and “potential troublemakers” to be dealt with if the COG protocols were ever invoked. These protocols could be invoked for a variety of reasons, including widespread public opposition to a US military intervention abroad, widespread internal dissent, or a vaguely defined moment of “national crisis” or “time of panic.” Americans were not informed if their name was placed on the list, and a person could be added to the list for merely having attended a protest in the past, for failing to pay taxes, or for other, “often trivial,” behaviors deemed “unfriendly” by its architects in the Reagan administration.

In light of this, it was no exaggeration when New York Times columnist William Safire remarked that, with TIA, “Poindexter is now realizing his twenty-year dream: getting the ‘data-mining’ power to snoop on every public and private act of every American.”

The TIA program met with considerable citizen outrage after it was revealed to the public in early 2003. TIA’s critics included the American Civil Liberties Union, which claimed that the surveillance effort would “kill privacy in America” because “every aspect of our lives would be catalogued,” while several mainstream media outlets warned that TIA was “fighting terror by terrifying US citizens.” As a result of the pressure, DARPA changed the program’s name to Terrorist Information Awareness to make it sound less like a national-security panopticon and more like a program aiming specifically at terrorists in the post-9/11 era.

The TIA projects were not actually closed down, however, with most moved to the classified portfolios of the Pentagon and US intelligence community. Some became intelligence-funded and intelligence-guided private-sector endeavors, such as Peter Thiel’s Palantir, while others resurfaced years later under the guise of combatting the COVID-19 crisis.

Soon after TIA was initiated, a similar DARPA program was taking shape under the direction of a close friend of Poindexter’s, DARPA program manager Douglas Gage. Gage’s project, LifeLog, sought to “build a database tracking a person’s entire existence” that included an individual’s relationships and communications (phone calls, mail, etc.), their media-consumption habits, their purchases, and much more in order to build a digital record of “everything an individual says, sees, or does.” LifeLog would then take this unstructured data and organize it into “discrete episodes” or snapshots while also “mapping out relationships, memories, events and experiences.”

LifeLog, per Gage and supporters of the program, would create a permanent and searchable electronic diary of a person’s entire life, which DARPA argued could be used to create next-generation “digital assistants” and offer users a “near-perfect digital memory.” Gage insisted, even after the program was shut down, that individuals would have had “complete control of their own data-collection efforts” as they could “decide when to turn the sensors on or off and decide who will share the data.” In the years since then, analogous promises of user control have been made by the tech giants of Silicon Valley, only to be broken repeatedly for profit and to feed the government’s domestic-surveillance apparatus.

The information that LifeLog gleaned from an individual’s every interaction with technology would be combined with information obtained from a GPS transmitter that tracked and documented the person’s location, audio-visual sensors that recorded what the person saw and said, as well as biomedical monitors that gauged the person’s health. Like TIA, LifeLog was promoted by DARPA as potentially supporting “medical research and the early detection of an emerging epidemic.”

Critics in mainstream media outlets and elsewhere were quick to point out that the program would inevitably be used to build profiles on dissidents as well as suspected terrorists. Building on TIA’s surveillance of individuals at multiple levels, LifeLog went further by “adding physical information (like how we feel) and media data (like what we read) to this transactional data.” One critic, Lee Tien of the Electronic Frontier Foundation, warned at the time that the programs that DARPA was pursuing, including LifeLog, “have obvious, easy paths to Homeland Security deployments.”

At the time, DARPA publicly insisted that LifeLog and TIA were not connected, despite their obvious parallels, and that LifeLog would not be used for “clandestine surveillance.” However, DARPA’s own documentation on LifeLog noted that the project “will be able . . . to infer the user’s routines, habits and relationships with other people, organizations, places and objects, and to exploit these patterns to ease its task,” which acknowledged its potential use as a tool of mass surveillance.

In addition to the ability to profile potential enemies of the state, LifeLog had another goal that was arguably more important to the national-security state and its academic partners: the “humanization” and advancement of artificial intelligence. In late 2002, just months prior to announcing the existence of LifeLog, DARPA released a strategy document detailing the development of artificial intelligence by feeding it massive floods of data from various sources.

The post-9/11 military-surveillance projects—LifeLog and TIA being only two of them—offered quantities of data that had previously been unthinkable to obtain and that could potentially hold the key to achieving the hypothesized “technological singularity.” The 2002 DARPA document even discusses DARPA’s effort to create a brain-machine interface that would feed human thoughts directly into machines to advance AI by keeping it constantly awash in freshly mined data.

One of the projects outlined by DARPA, the Cognitive Computing Initiative, sought to develop sophisticated artificial intelligence through the creation of an “enduring personalized cognitive assistant,” later termed the Perceptive Assistant that Learns, or PAL. PAL was, from the very beginning, tied to LifeLog, which was originally intended to grant an AI “assistant” human-like decision-making and comprehension abilities by spinning masses of unstructured data into narrative format.

The would-be main researchers for the LifeLog project also reflect the program’s end goal of creating humanized AI. For instance, Howard Shrobe at the MIT Artificial Intelligence Laboratory and his team at the time were set to be intimately involved in LifeLog. Shrobe had previously worked for DARPA on the “evolutionary design of complex software” before becoming associate director of the AI Lab at MIT and has devoted his lengthy career to building “cognitive-style AI.” In the years after LifeLog was cancelled, he again worked for DARPA as well as on intelligence community–related AI research projects. In addition, the AI Lab at MIT was intimately connected with the 1980s corporation and DARPA contractor called Thinking Machines, which was founded by and/or employed many of the lab’s luminaries—including Danny Hillis, Marvin Minsky, and Eric Lander—and sought to build AI supercomputers capable of human-like thought. All three of these individuals were later revealed to be close associates of and/or sponsored by the intelligence-linked pedophile Jeffrey Epstein, who also generously donated to MIT as an institution and was a leading funder of and advocate for transhumanist-related scientific research.

Soon after the LifeLog program was shuttered, critics worried that, like TIA, it would continue under a different name. For example, Lee Tien of the Electronic Frontier Foundation told VICE at the time of LifeLog’s cancellation, “It would not surprise me to learn that the government continued to fund research that pushed this area forward without calling it LifeLog.”

Along with its critics, one of the would-be researchers working on LifeLog, MIT’s David Karger, was also certain that the DARPA project would continue in a repackaged form. He told Wired that “I am sure such research will continue to be funded under some other title . . . I can’t imagine DARPA ‘dropping out’ of such a key research area.”

The answer to these speculations appears to lie with the company that launched the exact same day that LifeLog was shuttered by the Pentagon: Facebook.

Thiel Information Awareness

After considerable controversy and criticism, in late 2003, TIA was shut down and defunded by Congress, just months after it was launched. It was only later revealed that TIA was never actually shut down, with its various programs having been covertly divided up among the web of military and intelligence agencies that make up the US national-security state. Some of it was privatized.

The same month that TIA was pressured to change its name after growing backlash, Peter Thiel incorporated Palantir, which was, incidentally, developing the core panopticon software that TIA had hoped to wield. Soon after Palantir’s incorporation in 2003, Richard Perle, a notorious neoconservative from the Reagan and Bush administrations and an architect of the Iraq War, called TIA’s Poindexter and said he wanted to introduce him to Thiel and his associate Alex Karp, now Palantir’s CEO. According to a report in New York magazine, Poindexter “was precisely the person” whom Thiel and Karp wanted to meet, mainly because “their new company was similar in ambition to what Poindexter had tried to create at the Pentagon,” that is, TIA. During that meeting, Thiel and Karp sought “to pick the brain of the man now widely viewed as the godfather of modern surveillance.”

Soon after Palantir’s incorporation, though the exact timing and details of the investment remain hidden from the public, the CIA’s In-Q-Tel became the company’s first backer, aside from Thiel himself, giving it an estimated $2 million. In-Q-Tel’s stake in Palantir would not be publicly reported until mid-2006.

The money was certainly useful, but, as Alex Karp told the New York Times in October 2020, “the real value of the In-Q-Tel investment was that it gave Palantir access to the CIA analysts who were its intended clients.” A key figure in the making of In-Q-Tel investments during this period, including the investment in Palantir, was the CIA’s chief information officer, Alan Wade, who had been the intelligence community’s point man for Total Information Awareness. Wade had previously cofounded the post-9/11 Homeland Security software contractor Chiliad alongside Christine Maxwell, sister of Ghislaine Maxwell and daughter of Iran-Contra figure, intelligence operative, and media baron Robert Maxwell.

After the In-Q-Tel investment, the CIA would be Palantir’s only client until 2008. During that period, Palantir’s two top engineers—Aki Jain and Stephen Cohen—traveled to CIA headquarters at Langley, Virginia, every two weeks. Jain recalls making at least two hundred trips to CIA headquarters between 2005 and 2009. During those regular visits, CIA analysts “would test [Palantir’s software] out and offer feedback, and then Cohen and Jain would fly back to California to tweak it.” As with In-Q-Tel’s decision to invest in Palantir, the CIA’s chief information officer during this time remained one of TIA’s architects. Alan Wade played a key role in many of these meetings and subsequently in the “tweaking” of Palantir’s products.

Today, Palantir’s products are used for mass surveillance, predictive policing, and other disconcerting policies of the US national-security state. A telling example is Palantir’s sizable involvement in the new Health and Human Services–run wastewater surveillance program that is quietly spreading across the United States. As noted in a previous Unlimited Hangout report, that system is the resurrection of a TIA program called Biosurveillance. It is feeding all its data into the Palantir-managed and secretive HHS Protect data platform. The decision to turn controversial DARPA-led programs into private ventures, however, was not limited to Thiel’s Palantir.

The Rise of Facebook

The shuttering of TIA at DARPA had an impact on several related programs, which were also dismantled in the wake of public outrage over DARPA’s post-9/11 programs. One of these programs was LifeLog. As news of the program spread through the media, many of the same vocal critics who had attacked TIA went after LifeLog with similar zeal, with Steven Aftergood of the Federation of American Scientists telling Wired at the time that “LifeLog has the potential to become something like ‘TIA cubed.’” LifeLog being viewed as something that would prove even worse than the recently cancelled TIA had a clear effect on DARPA, which had just seen both TIA and another related program cancelled after considerable backlash from the public and the press.

The firestorm of criticism of LifeLog took its program manager, Doug Gage, by surprise, and Gage has continued to assert that the program’s critics “completely mischaracterized” the goals and ambitions of the project. Despite Gage’s protests and those of LifeLog’s would-be researchers and other supporters, the project was publicly nixed on February 4, 2004. DARPA never provided an explanation for its quiet move to shutter LifeLog, with a spokesperson stating only that it was related to “a change in priorities” for the agency. On DARPA director Tony Tether’s decision to kill LifeLog, Gage later told VICE, “I think he had been burnt so badly with TIA that he didn’t want to deal with any further controversy with LifeLog. The death of LifeLog was collateral damage tied to the death of TIA.”

Fortuitously for those supporting the goals and ambitions of LifeLog, a company that turned out to be its private-sector analogue was born on the same day that LifeLog’s cancellation was announced. On February 4, 2004, what is now the world’s largest social network, Facebook, launched its website and quickly rose to the top of the social media roost, leaving other social media companies of the era in the dust.

A few months after Facebook’s launch, in June 2004, Facebook cofounders Mark Zuckerberg and Dustin Moskovitz brought Sean Parker onto Facebook’s executive team. Parker, previously known for cofounding Napster, later connected Facebook with its first outside investor, Peter Thiel. As discussed, Thiel, at that time, in coordination with the CIA, was actively trying to resurrect controversial DARPA programs that had been dismantled the previous year. Notably, Sean Parker, who became Facebook’s first president, also had a history with the CIA, which recruited him at the age of sixteen soon after he had been busted by the FBI for hacking corporate and military databases. Thanks to Parker, in September 2004, Thiel formally acquired $500,000 worth of Facebook shares and was added to its board. Parker maintained close ties to Facebook as well as to Thiel, with Parker being hired as a managing partner of Thiel’s Founders Fund in 2006.

Thiel and Facebook cofounder Moskovitz became involved outside of the social network long after Facebook’s rise to prominence, with Thiel’s Founders Fund becoming a significant investor in Moskovitz’s company Asana in 2012. Thiel’s longstanding symbiotic relationship with Facebook cofounders extends to his company Palantir, as the data that Facebook users make public invariably winds up in Palantir’s databases and helps drive the surveillance engine Palantir runs for a handful of US police departments, the military, and the intelligence community. In the case of the Facebook–Cambridge Analytica data scandal, Palantir was also involved in utilizing Facebook data to benefit the 2016 Donald Trump presidential campaign.

Today, as recent arrests such as that of Daniel Baker have indicated, Facebook data is slated to help power the coming “war on domestic terror,” given that information shared on the platform is being used in the “precrime” capture of US citizens at home. In light of this, it is worth dwelling on the point that Thiel’s exertions to resurrect the main aspects of TIA as his own private company coincided with his becoming the first outside investor in what was essentially the analogue of another DARPA program deeply intertwined with TIA.